Welcome back to our Performance Tuning Series. In the previous post, we discussed Indexing Strategies, a key component of optimal query performance. In this post, we continue the series with writing efficient SQL, which builds on that foundation to get even better performance from your SQL Server queries.

Optimizing SQL queries is at the heart of database performance. Even with the best hardware and properly indexed tables, poorly written queries can slow down your SQL Server. Efficient SQL writing isn’t just about getting the right results; it’s about getting them quickly and with minimal resource consumption. Here’s how you can write SQL queries that are both efficient and effective.

Key Principles of Query Optimization

- Understand SQL Server Execution Plans:

- An execution plan is a roadmap of how SQL Server will execute a query. It shows the steps taken to retrieve the data, including the order of operations, the use of indexes, and the estimated cost of each operation.

- Use the “Include Actual Execution Plan” feature in SQL Server Management Studio (SSMS) to analyze how SQL Server processes your queries. Look for signs of inefficiency such as table scans (where the server reads every row in a table) and key lookups (which occur when a non-clustered index doesn’t cover all the columns needed by the query).

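- Pay attention to the “cost” associated with each step in the execution plan. High-cost operations are potential areas for optimization, such as converting table scans into index seeks. Keep in mind that these cost figures are the optimizer’s estimates, even in an actual plan, so also compare estimated and actual row counts: a large gap often points to outdated statistics or a predicate the optimizer can’t estimate well.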

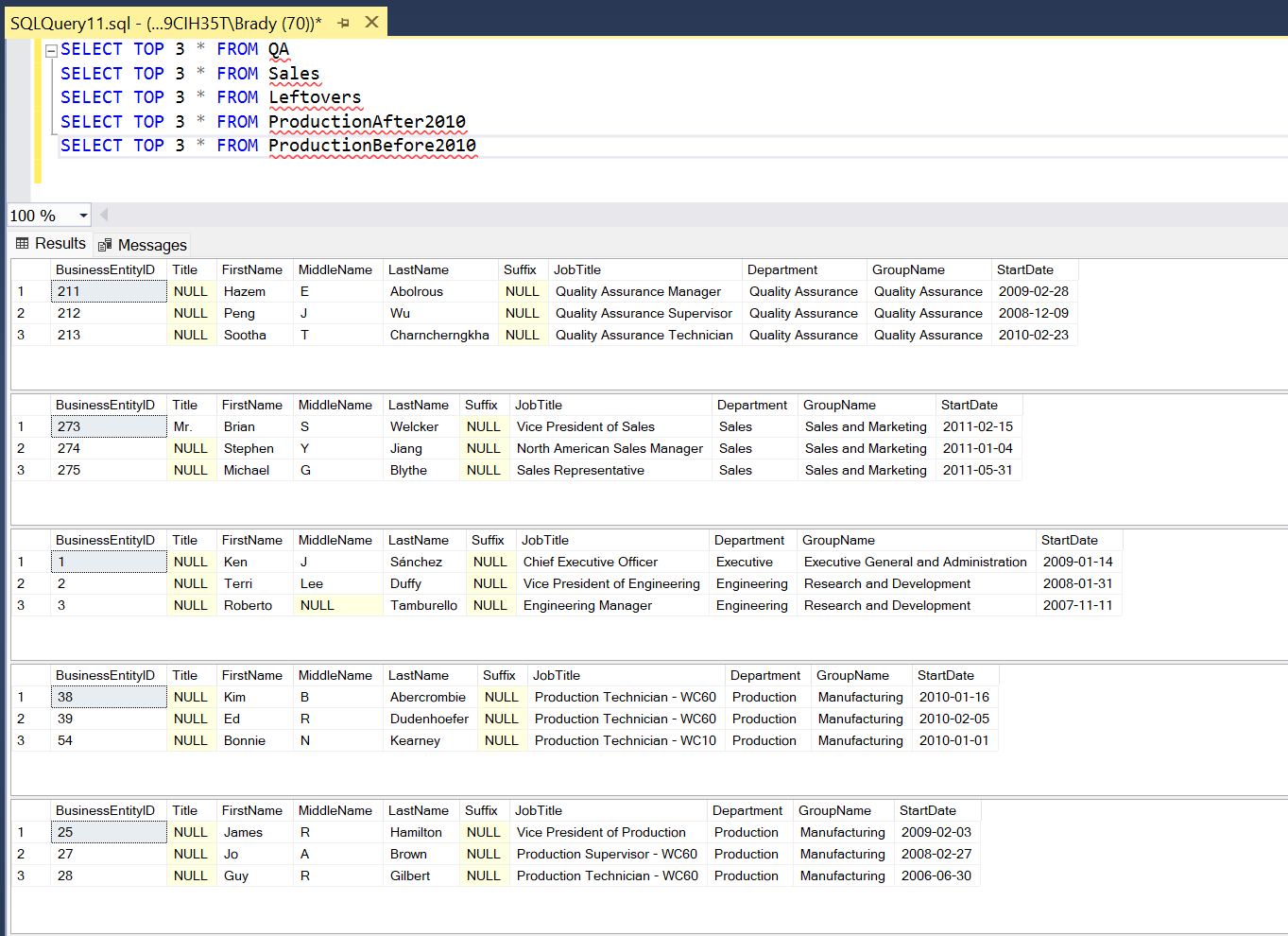
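To put numbers next to the graphical plan, you can turn on SQL Server’s session statistics before running a query. The sketch below is illustrative: the #Orders temporary table and its three rows are hypothetical demo data. SET STATISTICS IO and SET STATISTICS TIME print reads and CPU/elapsed time to the Messages tab, and SET STATISTICS XML returns the actual execution plan as an extra XML result.

```sql
-- Hypothetical demo table and data (names are illustrative only).
DROP TABLE IF EXISTS #Orders;            -- SQL Server 2016+ syntax
CREATE TABLE #Orders
(
    OrderID    int           NOT NULL PRIMARY KEY,  -- clustered index
    CustomerID int           NOT NULL,
    OrderDate  date          NOT NULL,
    TotalDue   decimal(10,2) NOT NULL
);

INSERT INTO #Orders (OrderID, CustomerID, OrderDate, TotalDue)
VALUES (101, 1, '2024-01-05', 120.00),
       (102, 1, '2024-02-11',  75.50),
       (103, 2, '2024-02-12', 310.25);

SET STATISTICS IO ON;    -- reads per table, shown in the Messages tab
SET STATISTICS TIME ON;  -- compile and execution CPU/elapsed time
SET STATISTICS XML ON;   -- actual execution plan returned as XML

-- There is no index on CustomerID, so the plan has to use a
-- Clustered Index Scan that reads every row to find the matches.
SELECT OrderID, OrderDate, TotalDue
FROM #Orders
WHERE CustomerID = 1;

SET STATISTICS XML OFF;
SET STATISTICS TIME OFF;
SET STATISTICS IO OFF;
```

In SSMS, clicking the XML result opens it as a graphical plan. On a three-row table every plan is cheap, so treat this as a template: on real tables, compare the reads before and after a change such as adding an index.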

- Try to avoid SELECT *:

- While it’s tempting to use SELECT * to fetch all columns from a table, it can lead to unnecessary data retrieval, increased IO, and slower performance, especially in tables with many columns or large data types.

- Instead, specify only the columns you need in the SELECT statement. This reduces the amount of data transferred and processed, leading to faster query execution and reduced network traffic. A narrow column list also gives a non-clustered index the chance to cover the query, which avoids the key lookups described above.

- Specifying columns also makes your queries easier to maintain. If a column is added to the table, your result set keeps the same shape; if a column your query depends on is removed, the query fails right away with an “Invalid column name” error instead of quietly returning something different.
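A small sketch of how the column list interacts with indexing follows. The #Customers table, its wide Notes column, and the IX_Customers_Region index are hypothetical. The point is that a non-clustered index can only cover a query when every column the query needs is stored in that index.

```sql
-- Hypothetical demo table; Notes stands in for a wide column you rarely need.
DROP TABLE IF EXISTS #Customers;
CREATE TABLE #Customers
(
    CustomerID int           NOT NULL PRIMARY KEY,  -- clustered key
    Email      nvarchar(256) NOT NULL,
    Region     char(2)       NOT NULL,
    Notes      nvarchar(max) NULL
);

INSERT INTO #Customers (CustomerID, Email, Region, Notes)
VALUES (1, N'ana@example.com', 'EU', REPLICATE(N'x', 4000)),
       (2, N'bo@example.com',  'US', NULL),
       (3, N'cy@example.com',  'EU', NULL);

-- Keyed on Region; Email is stored in the leaf level via INCLUDE.
-- CustomerID comes along automatically because it is the clustered key.
CREATE NONCLUSTERED INDEX IX_Customers_Region
    ON #Customers (Region) INCLUDE (Email);

-- Avoid: SELECT * also needs Notes, which the index doesn't contain, so SQL Server
-- must either look up each match in the clustered index or scan the clustered index.
SELECT *
FROM #Customers
WHERE Region = 'EU';

-- Prefer: every requested column is in IX_Customers_Region, so the index covers the query.
SELECT CustomerID, Email
FROM #Customers
WHERE Region = 'EU';
```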

- Use Proper JOINs:

- Understanding and using the correct type of JOIN is crucial for both correctness and performance. The JOIN types covered here are INNER JOIN, LEFT JOIN, RIGHT JOIN, and CROSS JOIN, each serving a different purpose.

- INNER JOIN: Returns only the rows where there is a match in both joined tables. The optimizer can freely reorder inner joins, so when an INNER JOIN returns the results you need, it is often the most efficient JOIN type.

- LEFT JOIN (or LEFT OUTER JOIN): Returns all rows from the left table and matched rows from the right table, with NULLs for non-matching rows. While useful, LEFT JOINs can be more resource-intensive, especially if the right table is large and has many unmatched rows.

- RIGHT JOIN: Similar to LEFT JOIN, but returns all rows from the right table and matched rows from the left. It is less commonly used and can often be rewritten as a LEFT JOIN by reversing the order of the tables.

- CROSS JOIN: Returns the Cartesian product of the two tables, which means every row in the first table is combined with every row in the second table. This JOIN type is usually not recommended unless you explicitly need all possible combinations, as it can result in an extremely large dataset and high resource usage.

- Ensure that you have appropriate indexes on the columns used in the JOIN conditions to prevent full table scans.
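The row counts below make the JOIN types concrete. #Customers and #Orders are hypothetical demo tables in which customer 3 has no orders; the comments give the number of rows each query returns for exactly this data.

```sql
-- Hypothetical demo tables and data: customer 3 has no orders.
DROP TABLE IF EXISTS #Customers, #Orders;
CREATE TABLE #Customers (CustomerID int NOT NULL PRIMARY KEY, Email nvarchar(256) NOT NULL,
                         Region char(2) NOT NULL, Notes nvarchar(max) NULL);
CREATE TABLE #Orders (OrderID int NOT NULL PRIMARY KEY, CustomerID int NOT NULL,
                      OrderDate date NOT NULL, TotalDue decimal(10,2) NOT NULL);

INSERT INTO #Customers (CustomerID, Email, Region)
VALUES (1, N'ana@example.com', 'EU'), (2, N'bo@example.com', 'US'), (3, N'cy@example.com', 'EU');
INSERT INTO #Orders (OrderID, CustomerID, OrderDate, TotalDue)
VALUES (101, 1, '2024-01-05', 120.00), (102, 1, '2024-02-11', 75.50), (103, 2, '2024-02-12', 310.25);

-- Index the join column on the "many" side so the join can seek instead of scan.
CREATE NONCLUSTERED INDEX IX_Orders_CustomerID ON #Orders (CustomerID);

-- INNER JOIN: matching rows only -> 3 rows (customer 3 drops out).
SELECT c.CustomerID, o.OrderID
FROM #Customers AS c
INNER JOIN #Orders AS o ON o.CustomerID = c.CustomerID;

-- LEFT JOIN: every customer -> 4 rows; customer 3 gets OrderID = NULL.
SELECT c.CustomerID, o.OrderID
FROM #Customers AS c
LEFT JOIN #Orders AS o ON o.CustomerID = c.CustomerID;

-- The same result as a RIGHT JOIN, with the table order reversed -> 4 rows.
SELECT c.CustomerID, o.OrderID
FROM #Orders AS o
RIGHT JOIN #Customers AS c ON o.CustomerID = c.CustomerID;

-- CROSS JOIN: every combination -> 3 customers x 3 orders = 9 rows.
SELECT c.CustomerID, o.OrderID
FROM #Customers AS c
CROSS JOIN #Orders AS o;
```

The RIGHT JOIN returns the same four rows as the LEFT JOIN, which is why it can usually be rewritten that way.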

- Leverage Query Hints Carefully:

- SQL Server provides query and table hints that can override the default behavior of the query optimizer. While these can be powerful tools in specific scenarios, they should be used cautiously: a hint stays in effect even as data volumes and indexes change, so it can end up forcing a suboptimal execution plan.

- FORCESEEK: Forces SQL Server to use an index seek instead of a scan. This hint can be helpful when you know an index exists and should be used, but SQL Server is choosing a scan instead.

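- NOLOCK: Allows reading uncommitted data (dirty reads). This hint can improve performance by not waiting on locks held by other transactions in scenarios where slight inaccuracies are acceptable, but it should be used with caution: besides returning changes that may later be rolled back, a NOLOCK scan that runs alongside concurrent modifications can skip rows or read the same row twice.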

- MAXDOP: Controls the maximum degree of parallelism for a query, limiting how many CPU cores can be used. This can be useful for balancing query performance with overall server workload.

- Use query hints as a last resort after ensuring that the query structure and indexes are optimized.
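The three hints above are written in two different places. FORCESEEK and NOLOCK are table hints, placed in WITH (...) after a table name, while MAXDOP is a query hint, placed in an OPTION (...) clause at the end of the statement. The sketch uses a hypothetical #Orders table and index only to show the syntax; it is not a recommendation to use these hints.

```sql
-- Hypothetical demo table, data, and index (syntax illustration only).
DROP TABLE IF EXISTS #Orders;
CREATE TABLE #Orders (OrderID int NOT NULL PRIMARY KEY, CustomerID int NOT NULL,
                      OrderDate date NOT NULL, TotalDue decimal(10,2) NOT NULL);
INSERT INTO #Orders (OrderID, CustomerID, OrderDate, TotalDue)
VALUES (101, 1, '2024-01-05', 120.00), (102, 1, '2024-02-11', 75.50), (103, 2, '2024-02-12', 310.25);
CREATE NONCLUSTERED INDEX IX_Orders_CustomerID ON #Orders (CustomerID) INCLUDE (TotalDue);

-- FORCESEEK (table hint): if no seek-based plan is possible,
-- the query fails with an error instead of falling back to a scan.
SELECT OrderID, TotalDue
FROM #Orders WITH (FORCESEEK)
WHERE CustomerID = 1;

-- NOLOCK (table hint, equivalent to READUNCOMMITTED): may return uncommitted
-- changes, and rows can be missed or read twice under concurrent modifications.
SELECT COUNT(*) AS ApproxOrderCount
FROM #Orders WITH (NOLOCK);

-- MAXDOP (query hint): limits parallelism for this statement only.
SELECT CustomerID, SUM(TotalDue) AS Total
FROM #Orders
GROUP BY CustomerID
OPTION (MAXDOP 1);   -- run this statement without parallelism
```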

- Optimize Subqueries and CTEs:

- Subqueries and Common Table Expressions (CTEs) are powerful tools in SQL but can be performance traps if not used wisely.

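- Subqueries: These can be used in SELECT, WHERE, or FROM clauses. A subquery that references columns from the outer query is called a correlated subquery, wherever it appears, and it can significantly slow down a query if SQL Server ends up evaluating it once for every row in the outer query. The optimizer can often rewrite such subqueries into joins on its own, but when the execution plan shows repeated executions, refactor the correlated subquery into a JOIN. For existence checks, EXISTS expresses the intent clearly; SQL Server frequently produces the same plan for IN and EXISTS, but NOT EXISTS is safer than NOT IN, which returns no rows at all if the subquery produces a NULL.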

- CTEs: CTEs can make complex queries easier to read and maintain, but they don’t make a query faster by themselves. SQL Server expands a non-recursive CTE into the query that references it, much like a derived table, so treat CTEs as you would any other subquery. Be especially mindful of recursive CTEs, which can be resource-intensive.

- For both subqueries and CTEs, consider whether the same logic could be implemented using derived tables, JOINs, or even temporary tables, which can sometimes offer better performance.
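As a sketch of the refactoring described above, here is a correlated subquery, a JOIN-based rewrite that returns the same results, and an EXISTS check. The tables and data are hypothetical. Whether the rewrite is faster depends on the plan SQL Server chooses (it often unnests the subquery by itself), so compare both in the execution plan rather than assuming.

```sql
-- Hypothetical demo tables and data: customer 3 has no orders.
DROP TABLE IF EXISTS #Customers, #Orders;
CREATE TABLE #Customers (CustomerID int NOT NULL PRIMARY KEY, Email nvarchar(256) NOT NULL,
                         Region char(2) NOT NULL, Notes nvarchar(max) NULL);
CREATE TABLE #Orders (OrderID int NOT NULL PRIMARY KEY, CustomerID int NOT NULL,
                      OrderDate date NOT NULL, TotalDue decimal(10,2) NOT NULL);
INSERT INTO #Customers (CustomerID, Email, Region)
VALUES (1, N'ana@example.com', 'EU'), (2, N'bo@example.com', 'US'), (3, N'cy@example.com', 'EU');
INSERT INTO #Orders (OrderID, CustomerID, OrderDate, TotalDue)
VALUES (101, 1, '2024-01-05', 120.00), (102, 1, '2024-02-11', 75.50), (103, 2, '2024-02-12', 310.25);

-- Correlated subquery: the inner query references c.CustomerID from the outer query.
SELECT c.CustomerID,
       (SELECT SUM(o.TotalDue)
        FROM #Orders AS o
        WHERE o.CustomerID = c.CustomerID) AS Total
FROM #Customers AS c;

-- JOIN rewrite: aggregate the orders once in a derived table, then join.
-- LEFT JOIN keeps customer 3 with a NULL total, matching the subquery's result.
SELECT c.CustomerID, t.Total
FROM #Customers AS c
LEFT JOIN (SELECT CustomerID, SUM(TotalDue) AS Total
           FROM #Orders
           GROUP BY CustomerID) AS t
    ON t.CustomerID = c.CustomerID;

-- Existence check: only needs to find one matching order per customer.
SELECT c.CustomerID
FROM #Customers AS c
WHERE EXISTS (SELECT 1 FROM #Orders AS o WHERE o.CustomerID = c.CustomerID);
```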

- Parameterize Queries:

- Parameterized queries allow you to reuse execution plans, improving performance and reducing the risk of SQL injection attacks.

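- Avoid building queries by concatenating hard-coded values into strings. This style of dynamic SQL is prone to SQL injection, and because every distinct value produces different query text, SQL Server typically compiles and caches a separate plan for each variation instead of reusing one. When you do need dynamic SQL, run it with sp_executesql and pass the values as parameters.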

- Use parameters to pass values into your queries. SQL Server can cache the execution plan for parameterized queries, leading to faster subsequent executions.
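The difference between concatenated and parameterized dynamic SQL is easiest to see side by side. This sketch uses a hypothetical #Orders table; sys.sp_executesql is SQL Server’s built-in procedure for running a statement with typed parameters.

```sql
-- Hypothetical demo table and data.
DROP TABLE IF EXISTS #Orders;
CREATE TABLE #Orders (OrderID int NOT NULL PRIMARY KEY, CustomerID int NOT NULL,
                      OrderDate date NOT NULL, TotalDue decimal(10,2) NOT NULL);
INSERT INTO #Orders (OrderID, CustomerID, OrderDate, TotalDue)
VALUES (101, 1, '2024-01-05', 120.00), (102, 1, '2024-02-11', 75.50), (103, 2, '2024-02-12', 310.25);

DECLARE @CustomerID int = 1;

-- Avoid: the value is concatenated into the statement text. Each distinct value
-- yields different text (and typically its own cached plan), and with string
-- input this pattern is open to SQL injection.
DECLARE @AdHocSql nvarchar(max) =
    N'SELECT OrderID, TotalDue FROM #Orders WHERE CustomerID = '
    + CAST(@CustomerID AS nvarchar(11)) + N';';
EXEC (@AdHocSql);

-- Prefer: constant statement text plus a typed parameter, so SQL Server
-- can reuse one cached plan for different @cust values.
EXEC sys.sp_executesql
     N'SELECT OrderID, TotalDue FROM #Orders WHERE CustomerID = @cust;',
     N'@cust int',
     @cust = @CustomerID;
```

With the parameterized form, changing @CustomerID changes only the parameter value, not the statement text, which is what lets SQL Server find and reuse the existing plan.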

- Manage Temporary Objects Wisely:

- Temporary tables, table variables, and Common Table Expressions (CTEs) are often used to hold or organize intermediate results within a query or batch. Each has its own performance implications, and choosing the right one is critical.

- Temporary Tables: These are created in the tempdb database and support indexes and statistics, making them suitable for storing large result sets or performing complex operations. However, creating and populating them adds overhead, including tempdb IO when the data doesn’t stay in memory.

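- Table Variables: Contrary to a common belief, table variables are not purely in-memory objects; like temporary tables, they are backed by tempdb. They carry less overhead for small datasets but lack column statistics, which can lead the optimizer to misestimate row counts and choose a suboptimal plan. They are a good choice when dealing with small, temporary datasets.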

- CTEs: As mentioned earlier, CTEs are great for improving query readability but should be used carefully. They do not persist results, meaning the underlying query is re-executed every time the CTE is referenced, potentially leading to performance issues with complex CTEs.

- Choose the appropriate temporary object based on the size of your dataset and the complexity of your operations. Monitor the performance impact of these objects using execution plans or tracing tools such as Extended Events (SQL Server Profiler still works but is deprecated).
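To compare the two kinds of temporary storage, the sketch below loads the same hypothetical rows into a temporary table and a table variable and runs the same aggregate against each. With the actual execution plan turned on, compare estimated and actual row counts: the table variable has no column statistics, and without SQL Server 2019’s table variable deferred compilation (database compatibility level 150 or later) its row estimate is typically a fixed guess of one row.

```sql
-- Hypothetical demo data loaded into both kinds of temporary storage.

-- Temporary table: created in tempdb; supports CREATE INDEX and statistics.
DROP TABLE IF EXISTS #RecentOrders;
CREATE TABLE #RecentOrders
(
    OrderID    int           NOT NULL PRIMARY KEY,
    CustomerID int           NOT NULL,
    TotalDue   decimal(10,2) NOT NULL
);
CREATE NONCLUSTERED INDEX IX_RecentOrders_CustomerID ON #RecentOrders (CustomerID);

INSERT INTO #RecentOrders (OrderID, CustomerID, TotalDue)
VALUES (101, 1, 120.00), (102, 1, 75.50), (103, 2, 310.25);

-- Table variable: also backed by tempdb, but without column statistics.
-- Indexes must be declared inline (PRIMARY KEY, UNIQUE, or INDEX on SQL Server 2014+).
DECLARE @RecentOrders TABLE
(
    OrderID    int           NOT NULL PRIMARY KEY,
    CustomerID int           NOT NULL,
    TotalDue   decimal(10,2) NOT NULL,
    INDEX IX_CustomerID NONCLUSTERED (CustomerID)
);

INSERT INTO @RecentOrders (OrderID, CustomerID, TotalDue)
SELECT OrderID, CustomerID, TotalDue
FROM #RecentOrders;

-- Same aggregate against each; compare estimated vs. actual rows in the actual plan.
SELECT CustomerID, SUM(TotalDue) AS Total FROM #RecentOrders GROUP BY CustomerID;
SELECT CustomerID, SUM(TotalDue) AS Total FROM @RecentOrders GROUP BY CustomerID;
```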

- Optimize Sorting and Filtering:

- Sorting and filtering data can be resource-intensive, especially on large datasets. To optimize these operations:

- Indexes: Ensure that your indexes support the sort and filter operations. For example, if you frequently order by a particular column, consider creating an index on that column.

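- WHERE Clauses: Be mindful of the conditions in your WHERE clauses. Write predicates that compare the column itself to a value (for example, a date range on the column rather than YEAR() applied to it), because wrapping a column in a function or expression usually prevents an index seek and forces a scan. For existence checks, consider EXISTS, which states the intent clearly.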

- GROUP BY and HAVING: GROUP BY can be expensive, especially with large datasets. An index on the grouping columns can let SQL Server aggregate rows in index order instead of sorting or hashing them. Filter rows in the WHERE clause whenever you can so fewer rows reach the aggregation, and reserve HAVING for conditions on aggregated values (such as SUM or COUNT), since it filters only after aggregation.
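The sketch below illustrates these points on a hypothetical #Orders table with a hypothetical index on OrderDate: a predicate that wraps the column in a function versus one that compares the bare column, an ORDER BY that matches the index key, and a row filter placed in WHERE while HAVING handles only the condition on the aggregate.

```sql
-- Hypothetical demo table and data.
DROP TABLE IF EXISTS #Orders;
CREATE TABLE #Orders (OrderID int NOT NULL PRIMARY KEY, CustomerID int NOT NULL,
                      OrderDate date NOT NULL, TotalDue decimal(10,2) NOT NULL);
INSERT INTO #Orders (OrderID, CustomerID, OrderDate, TotalDue)
VALUES (101, 1, '2024-01-05', 120.00), (102, 1, '2024-02-11', 75.50), (103, 2, '2024-02-12', 310.25);

-- Supports filtering and ordering on OrderDate; INCLUDE makes it covering below.
CREATE NONCLUSTERED INDEX IX_Orders_OrderDate
    ON #Orders (OrderDate) INCLUDE (CustomerID, TotalDue);

-- Harder to optimize: the function on OrderDate generally prevents an index seek.
SELECT OrderID
FROM #Orders
WHERE YEAR(OrderDate) = 2024;

-- Index-friendly: compare the bare column to a date range, which allows a seek.
SELECT OrderID
FROM #Orders
WHERE OrderDate >= '2024-01-01' AND OrderDate < '2025-01-01';

-- ORDER BY on the index key: rows can be read in index order without a Sort operator.
SELECT OrderDate, TotalDue
FROM #Orders
WHERE OrderDate >= '2024-02-01'
ORDER BY OrderDate;

-- Row filter in WHERE (before grouping); HAVING only for the aggregate condition.
SELECT CustomerID, SUM(TotalDue) AS Total
FROM #Orders
WHERE OrderDate >= '2024-02-01'      -- removes order 101 before aggregation
GROUP BY CustomerID
HAVING SUM(TotalDue) > 100;          -- keeps only customer 2 for this data
```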

- Limit the Use of Cursors:

- Cursors allow you to iterate through rows one at a time, but they can be slow and resource-intensive, as they process each row individually rather than in sets.

- Whenever possible, replace cursors with set-based operations, which are far more efficient in SQL Server. For example, use a single UPDATE statement to modify multiple rows instead of iterating through rows with a cursor.
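Here is the cursor-versus-set-based contrast as a sketch, using a hypothetical #Orders table and a made-up 10% discount. The cursor updates customer 1’s orders one row at a time; the single UPDATE after it applies the same change in one statement (to customer 2, so the sketch doesn’t discount the same rows twice).

```sql
-- Hypothetical demo table and data.
DROP TABLE IF EXISTS #Orders;
CREATE TABLE #Orders (OrderID int NOT NULL PRIMARY KEY, CustomerID int NOT NULL,
                      OrderDate date NOT NULL, TotalDue decimal(10,2) NOT NULL);
INSERT INTO #Orders (OrderID, CustomerID, OrderDate, TotalDue)
VALUES (101, 1, '2024-01-05', 120.00), (102, 1, '2024-02-11', 75.50), (103, 2, '2024-02-12', 310.25);

-- Row-by-row: a cursor that applies a 10% discount one order at a time.
DECLARE @OrderID int;
DECLARE order_cursor CURSOR LOCAL FAST_FORWARD FOR
    SELECT OrderID FROM #Orders WHERE CustomerID = 1;

OPEN order_cursor;
FETCH NEXT FROM order_cursor INTO @OrderID;
WHILE @@FETCH_STATUS = 0
BEGIN
    UPDATE #Orders SET TotalDue = TotalDue * 0.9 WHERE OrderID = @OrderID;
    FETCH NEXT FROM order_cursor INTO @OrderID;
END;
CLOSE order_cursor;
DEALLOCATE order_cursor;

-- Set-based: one UPDATE applies the same discount to every qualifying row at once.
UPDATE #Orders
SET TotalDue = TotalDue * 0.9
WHERE CustomerID = 2;
```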

Conclusion

Optimizing SQL queries is an essential skill for any SQL Server professional. By writing efficient queries, you can significantly reduce the load on your database, speed up data retrieval, and improve the overall performance of your applications. Following these best practices for query optimization will help ensure that your SQL Server databases operate at peak efficiency, providing fast and reliable access to the data your business depends on.

of SQL Freelancer
